Towards a Value-Complemented Framework for Enabling Human Monitoring in Cyber-Physical Systems

Zoe Pfister, Michael Vierhauser, Rebekka Wohlrab and Ruth Breu

Cyber-physical systems (CPS) are increasingly prevalent in our society; they combine the digital and physical parts of a system.

CPS regularly operate in uncertain, often safety-critical environments and interact with humans.

Runtime monitoring of hardware and software is well established, but few approaches address monitoring the interaction and security of humans.

The paper cites the work of Ross, Winstead and McEvilley.

Monitoring humans as part of CPS interactions raises concerns about privacy and data misuse, which impacts human-machine collaboration.

Cases of Amazon and a forestry company breaching people's privacy motivate integrating human values (e.g., privacy, security or self-direction) into the requirements engineering process.

Background/Baseline

Schwartz's theory of basic values contains 10 universal values grouped into four higher-order categories: openness to change, self-transcendence, self-enhancement and conservation.
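To make the grouping concrete, here is a minimal sketch that encodes the ten basic values and their higher-order groups as a lookup table. It assumes the commonly cited assignment, in which hedonism shares elements of openness to change and self-enhancement and is therefore mapped to both. The `Requirement` record and the example monitoring requirement are hypothetical illustrations of tagging requirements with values; they are not taken from the paper.

```python
from dataclasses import dataclass
from enum import Enum


class HigherOrderValue(Enum):
    """The four higher-order groups in Schwartz's theory of basic values."""
    OPENNESS_TO_CHANGE = "openness to change"
    SELF_TRANSCENDENCE = "self-transcendence"
    SELF_ENHANCEMENT = "self-enhancement"
    CONSERVATION = "conservation"


H = HigherOrderValue

# The ten basic values and the group(s) each belongs to.
# Assumption: hedonism is mapped to two groups, as it is usually described
# as sharing elements of openness to change and self-enhancement.
SCHWARTZ_VALUES: dict[str, frozenset[HigherOrderValue]] = {
    "self-direction": frozenset({H.OPENNESS_TO_CHANGE}),
    "stimulation": frozenset({H.OPENNESS_TO_CHANGE}),
    "hedonism": frozenset({H.OPENNESS_TO_CHANGE, H.SELF_ENHANCEMENT}),
    "achievement": frozenset({H.SELF_ENHANCEMENT}),
    "power": frozenset({H.SELF_ENHANCEMENT}),
    "security": frozenset({H.CONSERVATION}),
    "conformity": frozenset({H.CONSERVATION}),
    "tradition": frozenset({H.CONSERVATION}),
    "benevolence": frozenset({H.SELF_TRANSCENDENCE}),
    "universalism": frozenset({H.SELF_TRANSCENDENCE}),
}


def values_in(group: HigherOrderValue) -> list[str]:
    """Return the basic values belonging to a higher-order group, sorted by name."""
    return sorted(v for v, groups in SCHWARTZ_VALUES.items() if group in groups)


@dataclass(frozen=True)
class Requirement:
    """Hypothetical requirement record tagged with the basic values it serves."""
    text: str
    values: frozenset[str]

    def groups(self) -> set[HigherOrderValue]:
        """Higher-order groups touched by this requirement's values."""
        return {g for v in self.values for g in SCHWARTZ_VALUES[v]}


# Example: a hypothetical human-monitoring requirement in a CPS.
req = Requirement(
    "Workers can pause camera-based monitoring during breaks",
    frozenset({"self-direction", "security"}),
)
print(sorted(g.value for g in req.groups()))
```

Running the example prints `['conservation', 'openness to change']`, showing that a single monitoring requirement can touch value groups that pull in different directions.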

Whittle et al. leveraged Schwartz's taxonomy to enhance the RE process through value portraits.

Values capture the why of RE, complementing the what (functional requirements, FR) and the how (non-functional requirements, NFR).

Next steps:

Continuous value validation

- validate the requirements and their implementation during system operation ("monitor the monitors")

- traceability

- participatory design study with real-world stakeholders

Monitoring value tactics taxonomy

- develop a catalogue of monitoring tactics

- define how specific tactics can be translated into monitoring requirements

- empirical studies with companies

Ethics-aware requirements elicitation process: this future work is described in more detail in the paper.

*Q: How do you account for cultural differences? For example, countries that do not value privacy.

The values might differ, and they might even be negotiated beforehand, but that does not invalidate the research. The general steps of value-based RE can be reused across cultures, provided we acknowledge that differences in cultures and values exist.

Integrating Critical Systems Heuristics and Ethical Guidelines for Autonomous Vehicles

Amna Pir Muhammad, Irum Inayat and Eric Knauss

Context:

- AVs raise complex ethical challenges (e.g., safety, inclusion, societal impact)

- Design must go beyond technical performance; it should also address societal values

- Lack of practical methods for applying ethical principles in the early stages of RE.

RQ: How can we support ethics-aware requirements engineering for AVs?

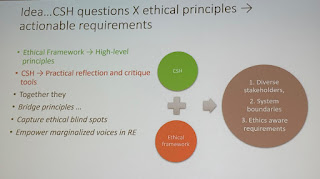

Research Vision: integrate the ethical framework of Guizzardi et al. with Critical Systems Heuristics (CSH) to support RE by:

- identifying diverse stakeholders' needs;

- defining system boundaries; and

- embedding ethics in the early stages of RE.

Critical Systems Heuristics (CSH) by Ulrich and Reynolds:

- 12 boundary questions

- focused on stakeholders' perspectives
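As a hedged illustration of where the 12 boundary questions come from, the sketch below encodes the boundary categories as they are commonly presented in Ulrich's CSH: four sources of influence (motivation, power, knowledge, legitimacy), each with three categories. It then generates prompts in the descriptive "is" mode and the normative "ought" mode. The prompt wording, the `boundary_prompts` helper and the example system are assumptions for illustration only; they paraphrase CSH and are not the questions or the ethical-framework mapping presented in the paper.

```python
from itertools import product

# The 12 CSH boundary categories, grouped by source of influence.
# Each source has a social role, a role-specific concern and a key problem.
CSH_CATEGORIES: dict[str, tuple[str, str, str]] = {
    "motivation": ("beneficiary", "purpose", "measure of improvement"),
    "power": ("decision-maker", "resources", "decision environment"),
    "knowledge": ("expert", "expertise", "guarantee"),
    "legitimacy": ("witness", "emancipation", "worldview"),
}

# CSH asks each boundary question in two modes: descriptive and normative.
MODES = ("is", "ought to be")


def boundary_prompts(system: str) -> list[str]:
    """Build paraphrased boundary prompts for `system` (not Ulrich's exact wording)."""
    prompts = []
    for source, categories in CSH_CATEGORIES.items():
        # product() pairs every category with both modes, "is" first.
        for category, mode in product(categories, MODES):
            prompts.append(f"[{source}] Who or what {mode} the {category} of {system}?")
    return prompts


prompts = boundary_prompts("an autonomous shuttle")
print(len(prompts))
print(prompts[0])
print(prompts[1])
```

This yields 24 prompts (12 categories in two modes); comparing the "is" and "ought" answers for the same category is what exposes contested system boundaries.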

In the paper, they present a large table showing the questions and the rationale behind them, mapping the two adopted approaches (ethical framework and CSH).

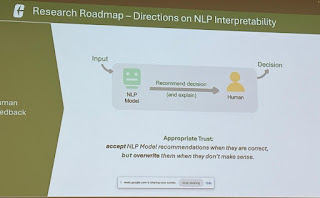

Research Roadmap

- stakeholder workshops for validation

- template for ethical requirement elicitation

- empirical studies on real-world applications

- resolving ethical trade-offs (e.g. safety vs. fairness)

Q: Are the questions at the system level, feature level or use-case level?

They target the general domain and can be subdivided case by case.

Stakeholders need guidance to understand the kind of system being developed and to properly express requirements.

Limitation: there is no consensus on the questions yet; there is still much disagreement about them (work in progress).

Methodology: so far they do not follow any particular methodology; one possible direction is Design Science, but they have not decided yet.

Veracity Debt: Practitioners Voices on Managing Software Requirements concerning Veracity

Judith Perera, Ewan Tempero, Yu-Cheng Tu and Kelly Blincoe

Veracity: a multi-faceted concept related to the notions of truth, trust, authenticity and demonstrability.

- Is it the truth? Can we trust it?

- Is it genuine? What's its origin?

- How can its credibility be demonstrated?

They previously worked on the certification of supply chains and then decided to apply this to software development as well.

She showed some demographics of the survey participants.

Survey results:

1) Most common type of veracity requirement: data veracity (86%), followed by regulatory and process veracity.

2) What these requirements impact:

- software architecture (12/38)

- end user (15/38)

- software company (18/38)
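The impact counts can be read as shares of respondents. A minimal sketch, assuming that 38 is the number of respondents who answered this question (the notes do not state this explicitly); the names `IMPACT_COUNTS`, `RESPONDENTS` and `shares` are illustrative:

```python
# Counts reported on the slide for "what these requirements impact".
# Assumption: 38 is the number of respondents who answered the question.
IMPACT_COUNTS = {"software architecture": 12, "end user": 15, "software company": 18}
RESPONDENTS = 38


def shares(counts: dict[str, int], total: int) -> dict[str, float]:
    """Percentage of respondents per impact area, rounded to one decimal."""
    return {area: round(100 * n / total, 1) for area, n in counts.items()}


print(shares(IMPACT_COUNTS, RESPONDENTS))

# The counts sum to more than the number of respondents, which suggests
# (but does not prove) that respondents could select more than one area.
print(sum(IMPACT_COUNTS.values()), ">", RESPONDENTS)
```

Under that assumption the shares are roughly 31.6% (software architecture), 39.5% (end user) and 47.4% (software company); the 45 selections across 38 respondents suggest a multiple-choice question.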

*She presented an interesting discussion slide (more in the paper).

Future work

- interviews to clarify results of the survey

- proposal for veracity as a distinct quality attribute.

Limitations:

- Some important demographic data is not presented (e.g., participants' country). She said that some of the demographic questions (country, for instance) were optional, so it would be unfair to present them, since that information is available for some participants but not all.

- It is questionable how veracity relates to existing quality attributes. There is a lot of overlap, which the work does not yet consider. She said they will address this in future work by examining the literature and by talking to people from different backgrounds.

It is interesting, but also complex, to have so many umbrella concepts, for example, veracity alongside trust, reliability, security, etc.

Q: Is it possible to mine software repositories (e.g., of open-source software) to check whether they contain veracity requirements? She said: absolutely! They are planning to do exactly this, in addition to the interviews.